Human-computer interaction (HCI) is a field that focuses on the design and use of computer technology, particularly the interfaces between people (users) and computers. As artificial intelligence (AI) systems become more prevalent, designing effective and intuitive interfaces for these systems is increasingly important. These interfaces must not only facilitate smooth interactions but also ensure that users can understand and trust the AI systems they are using. This article explores the principles, challenges, and strategies involved in designing interfaces for AI systems, emphasizing the need for user-centric approaches in the rapidly evolving landscape of AI technology.

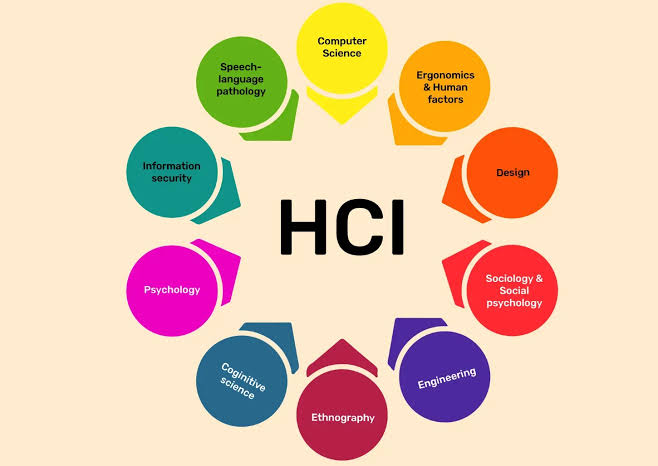

Human-computer interaction is a multidisciplinary field drawing from computer science, cognitive psychology, design, and other areas. It seeks to understand how people interact with computers and to develop technologies that enable effective and efficient interaction. The advent of AI has expanded HCI to address the unique challenges and opportunities presented by intelligent systems.

The goal of HCI in the context of AI is to create interfaces that are functional, user-friendly, and intuitive. The design should bridge the gap between complex AI algorithms and users who may not have technical expertise but still need to interact with these systems for various tasks.

Designing interfaces for AI systems involves several key principles that ensure usability, transparency, and user satisfaction.

User-Centered Design

User-centered design (UCD) is a process that focuses on the needs, preferences, and limitations of end-users at every stage of the design process. In the context of AI, UCD involves understanding how users interact with AI systems, what tasks they need to accomplish, and what challenges they face. By involving users in the design process through techniques such as interviews, surveys, and usability testing, designers can create interfaces that are tailored to user needs and expectations.

Transparency and Explainability

One of the major challenges in designing AI interfaces is ensuring that users can understand and trust the AI's decisions and actions. Transparency involves making the AI system's processes and outcomes visible and comprehensible to users. Explainability goes a step further by providing clear and understandable explanations of how the AI arrived at a particular decision or recommendation. This is particularly important in high-stakes domains such as healthcare, finance, and legal systems, where users must be able to trust the AI's output in order to make informed decisions.
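As a rough illustration of explainability, the sketch below assumes a simple linear scoring model, chosen only because its per-feature contributions are easy to compute and show. The names `explain_linear` and `render`, and the feature names in the usage note, are hypothetical. Real systems often use more complex models that need dedicated explanation techniques.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Explanation:
    score: float
    # (feature name, signed contribution), largest magnitude first
    contributions: List[Tuple[str, float]]


def explain_linear(weights: Dict[str, float],
                   features: Dict[str, float],
                   bias: float = 0.0) -> Explanation:
    """Break a linear score into one contribution per feature."""
    contribs = [(name, w * features.get(name, 0.0)) for name, w in weights.items()]
    contribs.sort(key=lambda c: abs(c[1]), reverse=True)
    score = bias + sum(value for _, value in contribs)
    return Explanation(score, contribs)


def render(expl: Explanation, top_k: int = 2) -> str:
    """Turn the strongest contributions into a short, readable sentence."""
    parts = [
        f"{name} ({'raised' if value >= 0 else 'lowered'} the score by {abs(value):.2f})"
        for name, value in expl.contributions[:top_k]
    ]
    return f"Score {expl.score:.2f}. Main factors: " + "; ".join(parts) + "."
```

An interface could display the rendered sentence next to the recommendation, for example `render(explain_linear({"income": 0.5, "debt": -1.0}, {"income": 4.0, "debt": 1.5}))`, so that users see which inputs mattered most instead of only the final score.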

Affordance and Feedback

Affordance refers to the design elements that indicate how an object should be used. In AI interfaces, affordances help users understand how to interact with the system, such as which buttons to press or which commands to issue. Feedback, on the other hand, provides users with information about the results of their actions. Effective feedback helps users understand the consequences of their interactions with the AI system and can guide them in making subsequent decisions.

Personalization and Adaptivity

AI systems can learn from user interactions and adapt their behavior to better meet user needs. Personalization involves tailoring the interface and the system's responses based on individual user preferences and behaviors. Adaptive systems can adjust their functionality and presentation in real time, providing a more efficient and satisfying user experience. For example, a personalized AI assistant might learn a user's schedule and preferences to provide more relevant reminders and suggestions.
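One very simple way to picture this kind of learning is a frequency count over the user's past choices. The sketch below is an assumption-laden toy, not a description of how any particular assistant works: the class name `PreferenceModel` and the `min_observations` cutoff are hypothetical.

```python
from collections import Counter


class PreferenceModel:
    """Suggests the user's most frequent choice once enough data exists."""

    def __init__(self, default: str, min_observations: int = 3):
        self.default = default
        self.min_observations = min_observations
        self.counts = Counter()

    def observe(self, choice: str) -> None:
        """Record one choice the user actually made."""
        self.counts[choice] += 1

    def suggest(self) -> str:
        """Fall back to the default until there is enough evidence."""
        if sum(self.counts.values()) < self.min_observations:
            return self.default
        return self.counts.most_common(1)[0][0]
```

Keeping a default until a minimum number of observations is reached is one way to avoid adapting too eagerly to a single unusual action.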

Challenges in Designing AI Interfaces

Despite the potential benefits, designing interfaces for AI systems presents several challenges that need to be addressed to ensure effective user interaction.

Complexity and Usability

AI systems often involve complex algorithms and data processing, which can make it difficult to design simple and intuitive interfaces. The challenge is to abstract this complexity in a way that users can easily understand and interact with the system without being overwhelmed. Striking the right balance between functionality and simplicity is crucial for usability.

Trust and Reliance

Trust is a critical factor in the adoption and use of AI systems. Users need to trust that the AI is reliable, accurate, and acting in their best interest. Building this trust involves ensuring transparency, providing explanations for AI decisions, and allowing users to retain control over the system's actions. Over-reliance on AI can also be a concern, as users might become too dependent on the system and fail to critically assess its outputs.
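One way to keep users in control, sketched here under stated assumptions, is to gate automation on the system's own confidence: act automatically only when confidence is high, ask for confirmation in the middle range, and hand the decision back otherwise. The function name `decide_action`, the action labels, and the threshold values are hypothetical and would need tuning and user testing in practice.

```python
def decide_action(confidence: float,
                  auto_threshold: float = 0.9,
                  suggest_threshold: float = 0.6) -> str:
    """Map a model confidence in [0, 1] to an interaction pattern."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= auto_threshold:
        # High confidence: act, but leave the user a way to reverse it.
        return "act_with_undo"
    if confidence >= suggest_threshold:
        # Medium confidence: propose the action and wait for approval.
        return "suggest_and_confirm"
    # Low confidence: make no recommendation and let the user decide.
    return "defer_to_user"
```

Requiring confirmation in the middle range also prompts users to look at the output critically, which may help counter over-reliance.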

Ethical Considerations

AI systems can have significant ethical implications, particularly concerning privacy, bias, and fairness. Designing ethical AI interfaces involves ensuring that user data is handled responsibly, that the system is free from discriminatory biases, and that the AI's actions are fair and just. Designers must consider these ethical aspects and incorporate safeguards to protect users' rights and interests.

Strategies for Effective AI Interface Design

To address these challenges and create effective AI interfaces, several strategies can be employed.

Iterative Design and Testing

Iterative design involves repeatedly refining the interface based on user feedback and testing. By conducting usability tests with real users, designers can identify issues and make improvements before the system is widely deployed. This approach helps ensure that the final product is user-friendly and meets the needs of its intended audience.

Multimodal Interaction

Multimodal interaction involves using multiple modes of communication, such as voice, text, gestures, and visual cues, to interact with the AI system. This approach allows users to choose the mode that is most convenient and intuitive for them, enhancing the overall user experience. For instance, a smart home assistant might support both voice commands and a mobile app interface, providing flexibility in how users interact with the system.
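A common way to support several input modes is to translate each one into the same internal representation, so that the rest of the system does not care how a request arrived. The sketch below assumes a toy smart-home setup. The `Intent` type, the two parser functions, and the payload format are hypothetical, and the voice parser only handles two fixed phrases rather than real speech understanding.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Intent:
    action: str   # "on" or "off"
    target: str   # device name, lowercased


def from_voice(utterance: str) -> Optional[Intent]:
    """Parse a transcribed phrase such as 'Turn on the kitchen light'."""
    text = utterance.lower().strip()
    for phrase, action in (("turn on ", "on"), ("turn off ", "off")):
        if text.startswith(phrase):
            target = text[len(phrase):].strip()
            if target.startswith("the "):
                target = target[len("the "):]
            if target:
                return Intent(action, target)
    return None  # not understood; the interface should ask the user to rephrase


def from_app(payload: dict) -> Intent:
    """Convert a button press from a (hypothetical) mobile app payload."""
    return Intent(payload["action"], payload["target"].lower())
```

Because both paths produce the same `Intent`, the user can switch between voice and the app without the system behaving differently.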

Context-Aware Interfaces

Context-aware interfaces adjust their behavior based on the context in which they are used. This involves understanding the user's environment, preferences, and current tasks to provide relevant and timely information and actions. For example, a context-aware navigation system might adjust its route recommendations based on real-time traffic conditions and the user's driving preferences.
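The navigation example can be sketched as a small selection function that combines live conditions with a stored preference. The names `Route`, `Context`, and `recommend`, and the route data in the usage note, are hypothetical. A real navigation system would weigh many more signals.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Route:
    name: str
    base_minutes: float
    uses_highway: bool


@dataclass
class Context:
    # Extra minutes per route name caused by current traffic.
    traffic_delay: Dict[str, float] = field(default_factory=dict)
    avoid_highways: bool = False


def recommend(routes: List[Route], ctx: Context) -> Route:
    """Pick the fastest route that respects the user's preference."""
    allowed = [r for r in routes if not (ctx.avoid_highways and r.uses_highway)]
    candidates = allowed or routes  # if nothing matches, fall back to all routes
    return min(candidates,
               key=lambda r: r.base_minutes + ctx.traffic_delay.get(r.name, 0.0))
```

With a 20-minute highway route and a 30-minute local route, this sketch picks the highway when traffic is clear, and switches to the local route when the highway has a 15-minute delay or the user prefers to avoid highways.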

Education and Training

Providing users with education and training on how to effectively use AI systems can enhance their understanding and confidence. This can include tutorials, user guides, and in-app assistance that help users learn about the system's capabilities and how to interact with it. By empowering users with knowledge, designers can facilitate smoother and more effective interactions with AI systems.

Conclusion

Human-computer interaction plays a critical role in the design and use of AI systems, ensuring that these technologies are accessible, understandable, and trustworthy for users. By adhering to principles such as user-centered design, transparency, and personalization, and by addressing challenges related to complexity, trust, and ethics, designers can create AI interfaces that enhance user experience and foster positive interactions. As AI continues to evolve, the field of HCI will remain essential in bridging the gap between advanced technology and human users, enabling the seamless integration of AI into everyday life.